Introduction

The introduction of AlexNet back in 2012 can be considered one of the breakthrough events that triggered the ongoing evolution of the deep learning (DL) subdomain of artificial intelligence. In that year, AlexNet greatly outperformed competing algorithms by a large margin during the ImageNet challenge, a competition to algorithmically identify objects, such as cats or dogs, in images. AlexNet belongs to the class of so-called convolutional neural networks (CNN), a type of artificial neural network tailored to process image data. Although its components were not entirely new, AlexNet was one of the first neural networks to heavily utilize Graphics Processing Units (GPUs) to massively accelerate its learning abilities, while other neural networks still relied on much slower conventional Central Processing Units (CPUs). The success of AlexNet drew attention to the entire field, leading to a constant influx of funding and skilled researchers and engineers. As a result, the number of neural network architectures solving a variety of problems has exploded in recent years. Naturally, it is hard to keep track of these developments, and curated knowledge remains scarce. Hence, in this post, I want to briefly introduce five common image analysis tasks for which neural networks can be used. This post is aimed at people with little to no knowledge about DL, but who are considering carrying out a DL project as part of their work or research.

Image Analysis Tasks

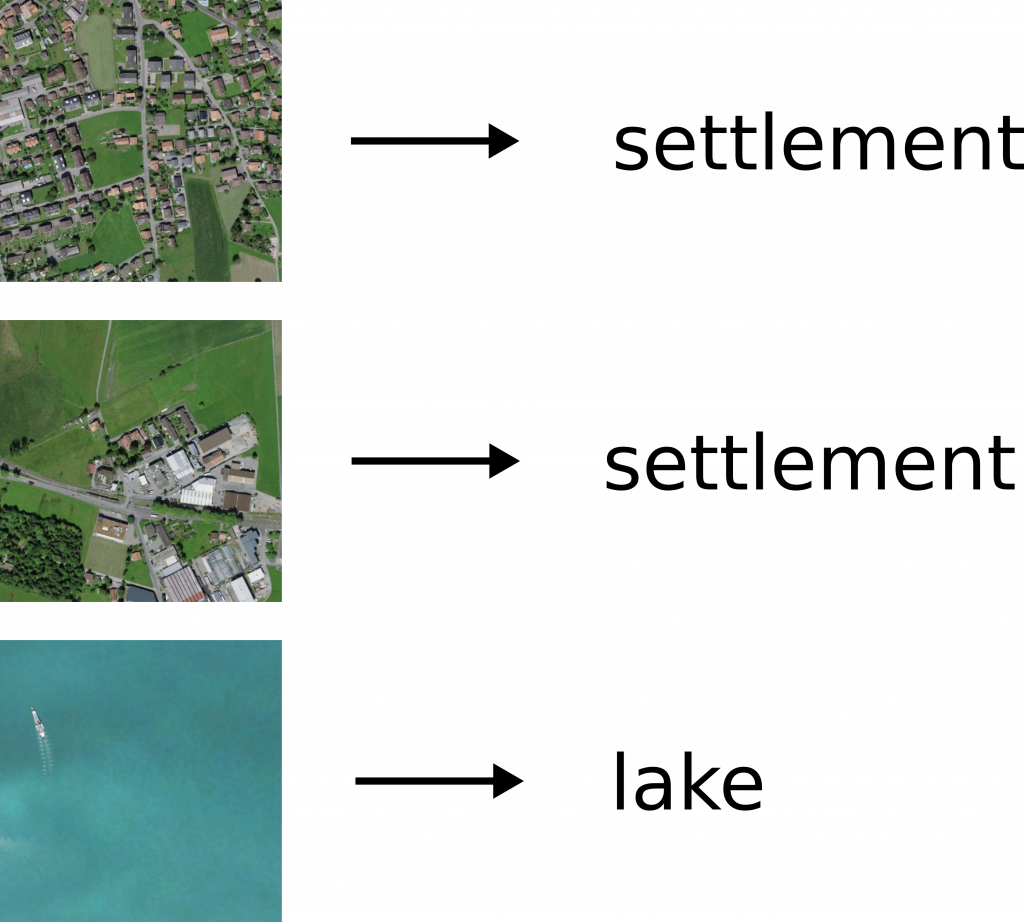

First, one of the most straightforward computer vision problems is Image Classification, the very task in which AlexNet originally excelled. In Image Classification, the algorithm’s challenge is to determine the single object depicted in an image. Classical examples of these objects include animals like cats or dogs, but virtually any object can be identified as long as it is unambiguously visible in the input image. For instance, when provided with earth observation data, a single image can be categorized based on the depicted land cover, such as a lakes or a settlements (see Figure 1). Typical architectures for Image Classification are AlexNet, VGGNet or ResNet.

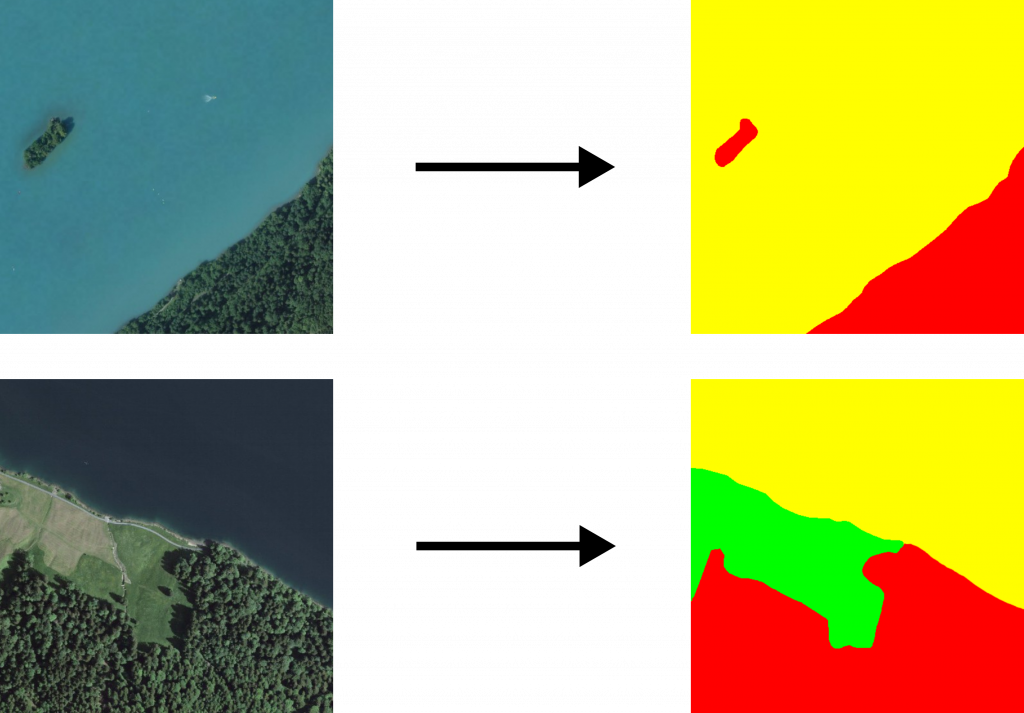

Second, Image Segmentation represents an extension of the Image Classification problem. While the input is still an image, the task is not to output a single category but rather another image in which each pixel holds a value representing the class at that location. This means that the output typically consists of multiple classes, including one or more for the objects to be segmented and another one for the background. In practice, neural networks can handle virtually any number of classes. Figure 2 provides examples of how satellite images can be segmented according to the depicted land cover types. Typical neural network architectures for this task are U-Net, SegNet and PSPNet.

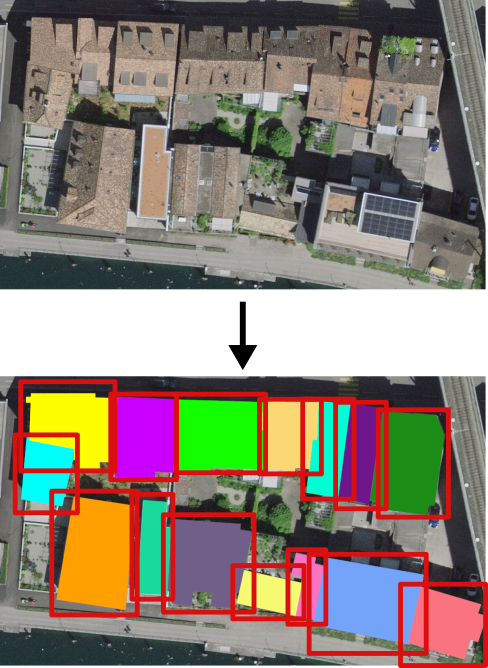

Third, while Image Classification deals with determining the presence of a certain class in an image and Image Segmentation deals with determining which pixels belong to that class, Instance Detection, also often referred to as Object Detection, focuses on detecting and localizing multiple distinct objects of the same class within a single image. Instances can include countable objects like humans, cars, or trees. Instance Detection models output a set of bounding boxes, each being assigned to a separate instance. Common architectures for Instance Detection are RetinaNet and the Yolo family of architectures.

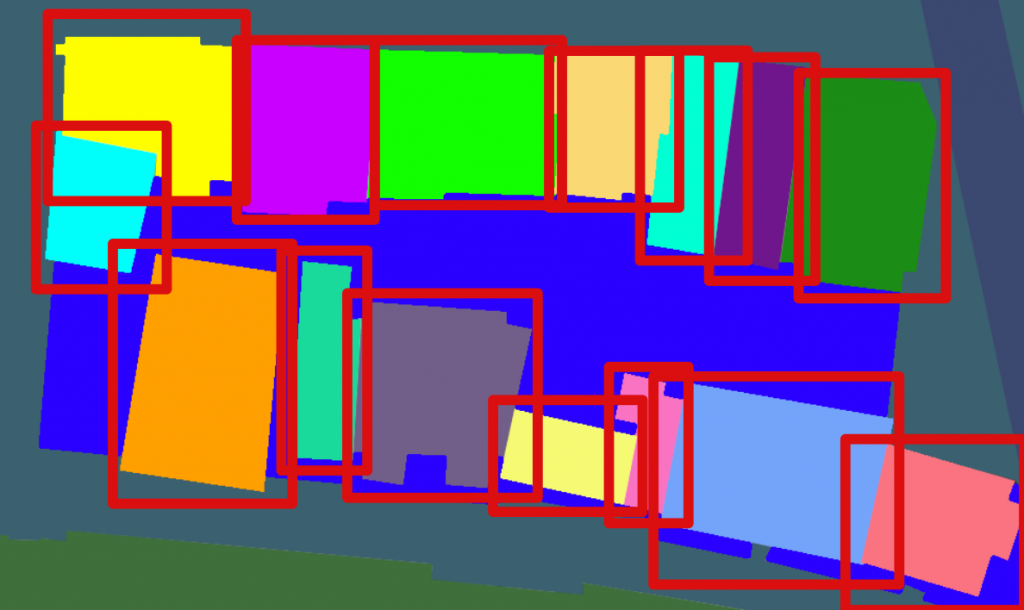

Fourth, Instance Segmentation extends Instance Detection by applying Image Segmentation to each instance. Therefore, in addition to generating bounding boxes, a segmentation map is produced for each bounding box. Instance Segmentation is particularly valuable in scenarios where multiple instances touch or overlap, as Image Segmentation might merge separate instances into a single entity. An example illustrating the usefulness of Instance Segmentation is shown in Figure 4, where adjacent buildings are extracted as distinct instances. Typically, one of the models of the RCNN family is used for this task.

Fifth, Panoptic Segmentation represents an extension of Instance Segmentation by dividing an image into countable objects (e.g., buildings, ships, trees) and uncountable regions (e.g., the ocean, the sky). The goal is to associate each pixel in the image with a distinct entity, avoiding the use of a generic ‘background’ class. Countable objects are marked with bounding boxes and associated segmentation maps, similar to Instance Segmentation, while uncountable regions are represented by pixel values. For Panoptic Segmentation, architectures like UPSNet or EPSNet can be used.

Variations

From a technical perspective, an image is a 3-dimensional matrix—a regular grid of numbers with a specific height, width, and number of channels. In a typical image, there can be 1 channel (grayscale), 3 channels (red, green, and blue components), or 4 channels (red, green, blue, and transparency). All these image formats are valid inputs for a CNN. However, CNNs can handle a virtually unlimited number of channels. This means that more complex imagery, such as data from the Sentinel 2 Earth observation mission with 12 different channels, can also be analyzed by a CNN as well. These channels don’t even necessarily have to represent color spectra; they could represent entirely different concepts like elevation, temperature, or population density. Similarly, there is flexibility in the outputs. While the examples mentioned above are valid for various feature extraction tasks, it is also possible to extract entirely different information from imagery or gridded data in general. For instance, instead of classifying images, the output can be adapted to yield a continuous value ranging from 0 to 1. This value might represent the degree of destruction of a town due to a natural disaster, where 0 indicates no damage and 1 indicates complete destruction. A similar approach has been implemented as a variation of Image Segmentation, using a modified U-Net, to obtain continuous values representing shaded relief.

Conclusion

This post has discussed five common image analysis tasks to which neural networks can be applied, along with potential variations. If you are considering undertaking a deep learning project, there are excellent resources available to help you learn the fundamentals, such as deeplearning.ai by Andrew Ng. If you want to get started with a hands-on image segmentation project for aerial imagery, I have a tutorial series on this topic available as a set of blog posts starting here. I wish you good luck and a lot of fun on your deep learning journey!